Shifting Down In Kubernetes

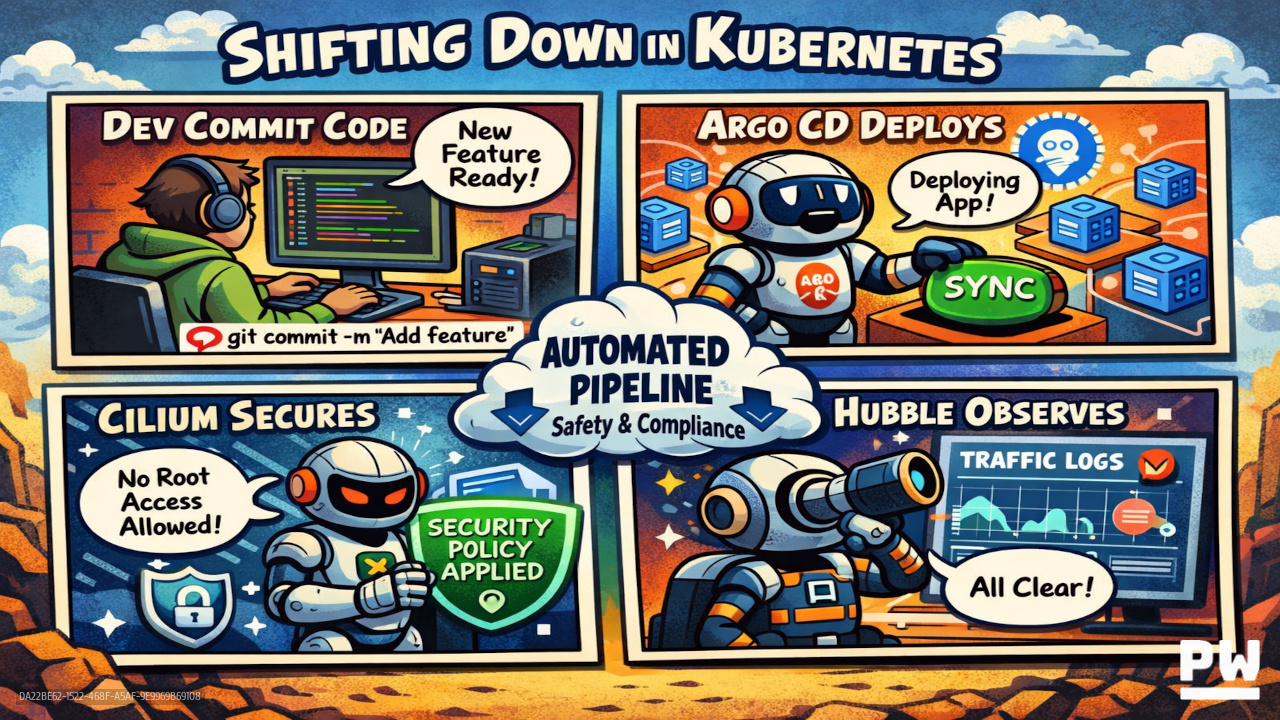

Following up on my previous post on shifting down, I want to walk through implementing it in a Kubernetes environment with some practical, high-level examples using common tools. Shifting down in Kubernetes is a strategic approach to reducing operational risk, accelerating delivery, and enabling teams to scale without a corresponding increase in complexity or headcount. Rather than relying on human processes, specialized expertise, or manual oversight, shifting down embeds reliability, security, and operational best practices directly into the platform itself. The journey to a mature shift-down platform typically unfolds across four phases: standardization, abstraction, productization, and governance. The examples below are deliberately simple; shifting down in your environment may look completely different.

Standardization

Standardization is the foundation of shifting down. Its primary goal is to eliminate inconsistency by ensuring that clusters, environments, and workloads are created and operated in the same way every time. A common starting point is standardized cluster bootstrapping using GitOps with Argo CD to install core components like Cilium.

```yaml
# Argo CD Application for installing Cilium
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: cilium
  namespace: argocd
spec:
  project: platform
  source:
    repoURL: https://helm.cilium.io/
    chart: cilium
    targetRevision: 1.15.x
    helm:
      # helm.values is a string, so the chart values are passed as a YAML block scalar
      values: |
        hubble:
          enabled: true
        # Replacing kube-proxy typically also requires k8sServiceHost and
        # k8sServicePort so the agent can reach the API server directly
        kubeProxyReplacement: true
  destination:
    server: https://kubernetes.default.svc
    namespace: kube-system
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
```

Standardization also applies to workload fundamentals, such as a baseline CiliumNetworkPolicy that denies all traffic by default to enforce a zero-trust posture.

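```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: default-deny
  namespace: orders
spec:
  # An empty selector matches every endpoint in the namespace
  endpointSelector: {}
  # CiliumNetworkPolicy has no policyTypes field; an empty rule in each
  # direction puts the selected endpoints into default deny without allowing anything
  ingress:
    - {}
  egress:
    - {}
  # Denying all egress also blocks DNS, so a companion policy that
  # allows traffic to kube-dns is usually required
```

Abstraction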

Abstraction builds on standardization by reducing the Kubernetes knowledge required by application teams. The platform encapsulates configuration into reusable patterns delivered through GitOps workflows, often using opinionated Helm values.

```yaml
# values.yaml consumed by a platform Helm chart
service:
  name: orders-api
  image: registry.example.com/orders:1.4.2
  port: 8080
  public: false
```

Networking complexity is similarly abstracted through Cilium’s identity-based networking, where access is expressed via labels rather than brittle IP addresses.

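```yaml
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: allow-orders-to-payments
  namespace: payments
spec:
  endpointSelector:
    matchLabels:
      app: payments
  ingress:
    - fromEndpoints:
        - matchLabels:
            app: orders
            # Without a namespace label, fromEndpoints only matches pods in the
            # policy's own namespace (payments), not pods in the orders namespace
            k8s:io.kubernetes.pod.namespace: orders
```

Productization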

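Productization treats the platform as an intentional, user-focused product. In this phase, the platform team provides Gateway API resources that give application teams precise, percentage-based traffic shifting: Argo Rollouts adjusts the backend weights on an HTTPRoute during a canary, and Cilium's Gateway API implementation enforces the resulting split.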
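The Rollout and HTTPRoute in this phase both lean on platform-owned plumbing that the application team never writes: a shared Gateway and the stable and canary Services named in the HTTPRoute's `backendRefs`. The sketch below is one way to provide them, assuming Cilium was installed with its Gateway API support enabled (the `gatewayAPI.enabled` Helm value plus the Gateway API CRDs, which in turn relies on kube-proxy replacement), so that a GatewayClass named `cilium` exists. The Gateway and namespace names mirror the HTTPRoute's `parentRefs`; the listener port and the `app: orders-api` pod label are hypothetical.

```yaml
# Platform-owned Gateway; assumes Cilium's Gateway API support is enabled
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: cilium-gateway
  namespace: cilium-ingress
spec:
  gatewayClassName: cilium
  listeners:
    - name: http
      protocol: HTTP
      port: 80
      allowedRoutes:
        # Let HTTPRoutes in other namespaces (such as orders) attach
        namespaces:
          from: All
---
# Stable and canary Services referenced by the HTTPRoute's backendRefs.
# Argo Rollouts adds a pod-template-hash to each selector during a rollout.
apiVersion: v1
kind: Service
metadata:
  name: orders-api-stable
  namespace: orders
spec:
  selector:
    app: orders-api   # hypothetical pod label
  ports:
    - port: 8080
      targetPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: orders-api-canary
  namespace: orders
spec:
  selector:
    app: orders-api   # hypothetical pod label
  ports:
    - port: 8080
      targetPort: 8080
```

If only certain team namespaces should be able to attach routes, `from: Selector` with a namespace label selector is a tighter alternative to `from: All`.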

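```yaml
# Argo Rollouts example with Cilium Gateway API traffic shifting
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: orders-api
  namespace: orders
spec:
  replicas: 5
  # selector and pod template omitted for brevity
  strategy:
    canary:
      # Services referenced by the HTTPRoute's backendRefs
      stableService: orders-api-stable
      canaryService: orders-api-canary
      trafficRouting:
        # Gateway API support ships as a traffic router plugin that must be
        # registered in the argo-rollouts-config ConfigMap
        plugins:
          argoproj-labs/gatewayAPI:
            httpRoute: orders-api-route
            namespace: orders
      steps:
        - setWeight: 20
        - pause: { duration: 5m }
```

The platform-managed HTTPRoute acts as the networking bridge: Argo Rollouts rewrites its backend weights at each step, and Cilium splits traffic between the stable and canary versions accordingly.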

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: orders-api-route
  namespace: orders
spec:
  parentRefs:
    - name: cilium-gateway
      namespace: cilium-ingress
  rules:
    - matches:
        - path: { type: PathPrefix, value: /api/orders }
      backendRefs:
        # Argo Rollouts adjusts these weights at each canary step
        - name: orders-api-stable
          port: 8080
          weight: 100
        - name: orders-api-canary
          port: 8080
          weight: 0
```

One of the greatest challenges in Kubernetes is observability friction: the need for developers to instrument their code or manage sidecars just to see whether their services are talking to each other. By shifting observability down into the platform with Hubble, visibility becomes a transparent infrastructure property rather than an application-level burden.

Unlike traditional service meshes that inject a sidecar proxy into every pod, Hubble uses eBPF to observe traffic directly in the Linux kernel. There is no sidecar tax: application pods carry no extra proxy container consuming CPU and memory (the collection cost is borne by the Cilium agent already running on each node), and developers do not need to modify their deployment manifests. Observability is simply there, provided by the platform as a background utility.

Because Hubble builds on the identity information Cilium derives from the Kubernetes API, it provides contextual data that traditional network tools lack. Instead of forcing developers to map cryptic IP addresses to services, Hubble provides visibility in the language of the platform: pod names, namespaces, and labels. This identity-aware approach means that when a developer inspects a flow, they see exactly which service is communicating, regardless of how many times a pod has been rescheduled or its IP address has changed.

With these platform-level insights, application teams can troubleshoot connectivity issues in seconds using the Hubble CLI.

```shell
# Observe dropped traffic in the 'orders' namespace with full identity context
hubble observe --namespace orders --verdict DROPPED

# Example output (abridged), showing pod names instead of IPs:
# Aug 3 15:23:40.501: orders/orders-api-v1-767cf -> payments/payments-api:8080 TCP Flags: SYN DROPPED (Policy denied)
```

For a high-level view, the Hubble UI provides an automatically generated service map. It visualizes the service dependencies and traffic flows actually observed in the cluster, which can be compared against the connectivity intended by the policies defined in the Abstraction phase, providing a living document of the cluster's architecture. By shifting observability down, we move from "I hope this is secure" to "I can see it is secure." This ensures that the standardized and governed platform is also a fully transparent one, closing the loop on the shift-down journey.
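The CLI and UI workflows above depend on more than `hubble.enabled`. Both read flows through Hubble Relay, which aggregates data from the Cilium agent on every node, and the UI is a separate deployment; as far as I know, both are disabled by default in the Cilium Helm chart. A sketch of the additional values, assuming they are merged into the `values` of the Argo CD Application from the Standardization phase:

```yaml
# Additional Cilium Helm values for the platform's observability layer
hubble:
  enabled: true
  relay:
    enabled: true   # aggregates flows cluster-wide for the hubble CLI and UI
  ui:
    enabled: true   # serves the service map
```

With these enabled, `cilium hubble port-forward` exposes Relay locally for `hubble observe`, and `cilium hubble ui` opens the service map.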

Governance

Governance ensures the platform remains secure and compliant through automated guardrails. Argo CD AppProjects restrict where teams can deploy, while Cilium enforces runtime security, such as restricting egress to specific ports.
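The Argo CD half of that guardrail can be expressed as an AppProject. The sketch below is illustrative: the project name and Git repository URL are hypothetical, and it assumes each team owns a single namespace. It limits the team to one destination namespace, forbids cluster-scoped resources, and keeps CiliumNetworkPolicies out of the team's hands so that platform-owned policies, such as the egress rule that follows, cannot be widened from the team's repositories.

```yaml
# Hypothetical AppProject scoping the orders team
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: orders
  namespace: argocd
spec:
  description: Guardrails for the orders team
  # Only manifests from the team's repositories (hypothetical URL)
  sourceRepos:
    - https://git.example.com/orders/*
  # Only the orders namespace on the local cluster
  destinations:
    - server: https://kubernetes.default.svc
      namespace: orders
  # No cluster-scoped resources (ClusterRoles, CRDs, Namespaces, and so on)
  clusterResourceWhitelist: []
  # Network policy stays platform-owned
  namespaceResourceBlacklist:
    - group: cilium.io
      kind: CiliumNetworkPolicy
```

Applications that set `spec.project: orders` then fail to sync if they target any other namespace or include a blacklisted resource kind.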

```yaml
# Enforcing port-level egress governance
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: restrict-egress
  namespace: orders
spec:
  endpointSelector: {}
  egress:
    # HTTPS (TCP 443) to any destination
    - toPorts:
        - ports:
            - port: "443"
              protocol: TCP
    # DNS to kube-dns; without this rule, name resolution fails
    - toEndpoints:
        - matchLabels:
            k8s:io.kubernetes.pod.namespace: kube-system
            k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: ANY
```

Shifting down is a deliberate evolution. By progressing through these four phases, organizations move security, reliability, and cost control into the platform itself. With tools like Cilium, Argo CD, and Hubble, these concerns become default behaviors rather than burdens on individual teams.